The Wonderful Curse of Graphical User Interfaces (Part 01)

Part 01: Can't Escape History

This series is based on a lengthy video essay I made about a year ago. In this series, I’ll be expanding on some key ideas from the history of the graphical user interface, diving deep into its origins, why things are the way they are, and the contradictions around GUIs in general. If you prefer to watch (with some unfortunate background music choices but really cool videos), you can click here.

Introduction

It took nearly 40 years for established norms and conventions to govern human-computer interaction. Today, virtually every computer comes with a keyboard and mouse (or trackpad) as primary input devices along with a screen that has windows, icons, and apps. Why is that?

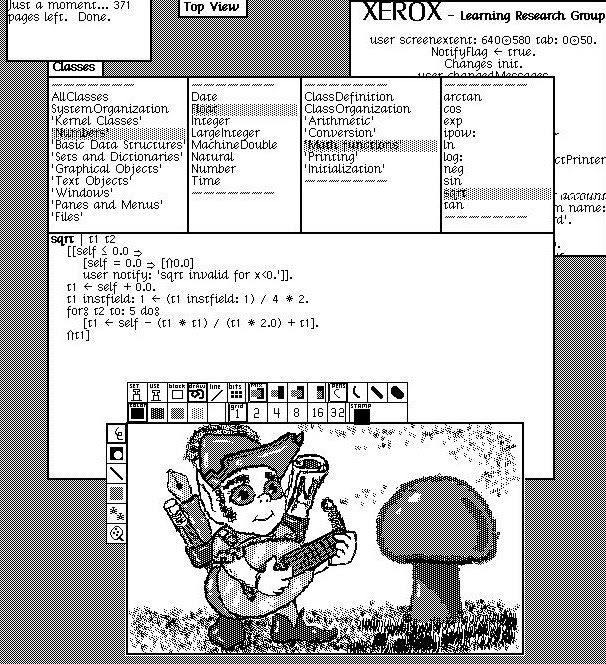

Most common computer actions—visiting and browsing websites, using software, filling out forms—rely on what the industry calls a graphical user interface (GUI), based on the WIMP (Windows, Icons, Menus, Pointers) paradigm.

This paradigm has also been adapted for smartphones and tablets, albeit with adjustments for screen size and touch interactions (gestures replace the mouse and keyboard, though WIMP principles are now reappearing on devices like the iPad and some Samsung phones).

While the underlying WIMP framework persists, the "evolving aesthetics" and progressive enhancements of each environment—from the web, macOS, and Windows to iOS and Android—create the illusion of unique frameworks when it comes to interactions.

Fundamentally, though, much has remained unchanged.

The GUI, however, is a relatively recent development. Its emergence is rooted in a complex history shaped by global conflict, government and corporate research, evolving conceptions of the computer's purpose and users, and the contributions of visionary thinkers.

Today, with the advent of generative AI, the GUI stands at a pivotal point. It's been evolving to accommodate diverse devices and technologies (computers, phones, generative AI, VR headsets, smart speakers, smart glasses, etc.) and interaction methods (keyboard, mouse, voice, and even brainwaves).

Generative AI is particularly significant because its output is redefining interactions. Currently, much of this interaction relies on prompts or sequential natural language commands, enabling tasks from email summarisation to image and video editing. Many startups and projects like Mainframe and Common Knowledge are exploring alternatives, or at least attempting to.

Questions like “If we can simply ask our machines to perform tasks, what's the point of a keyboard and mouse? What if we eliminate buttons altogether? What is the purpose of a desktop or an iPhone’s SpringBoard?” are now common, with many proposed solutions.

But the apparent UI stagnation before generative AI was somewhat artificial. Exploration of alternative interaction methods never ceased. Bret Victor’s work, for example, is quite significant in the field.

Whether you're a software developer, designer, or an everyday user, it’s probably quite natural to wonder: how did we arrive at this moment? What have we gained or lost along the way? How do the dominant GUI metaphors (windows, icons, menus, pointers, touch interfaces) shape our interactions with technology? What other possibilities existed? Why did certain ideas flourish while others faded? Why is it a wonderful curse?

This series is a personal exploration of the history and impact of the GUI. It's not an exhaustive history of computing or the GUI itself, but rather an examination of its origins, complexities, and possibly inherent contradictions.

Bear with me as I delve into the backstory, because, like everything touched by modern digital technology, it all begins with "computers."

Can’t Escape History!

This isn't strictly a history of computers, but understanding this history is crucial for grasping how user interfaces came to exist. The core function of computers was calculation, and it took considerable effort to shift our thinking about these machines from purely calculation-focused tools to more abstract devices capable of pretty much anything we want them to do. This involved rethinking the very meaning of "calculation" and exploring the broader possibilities it could unlock.

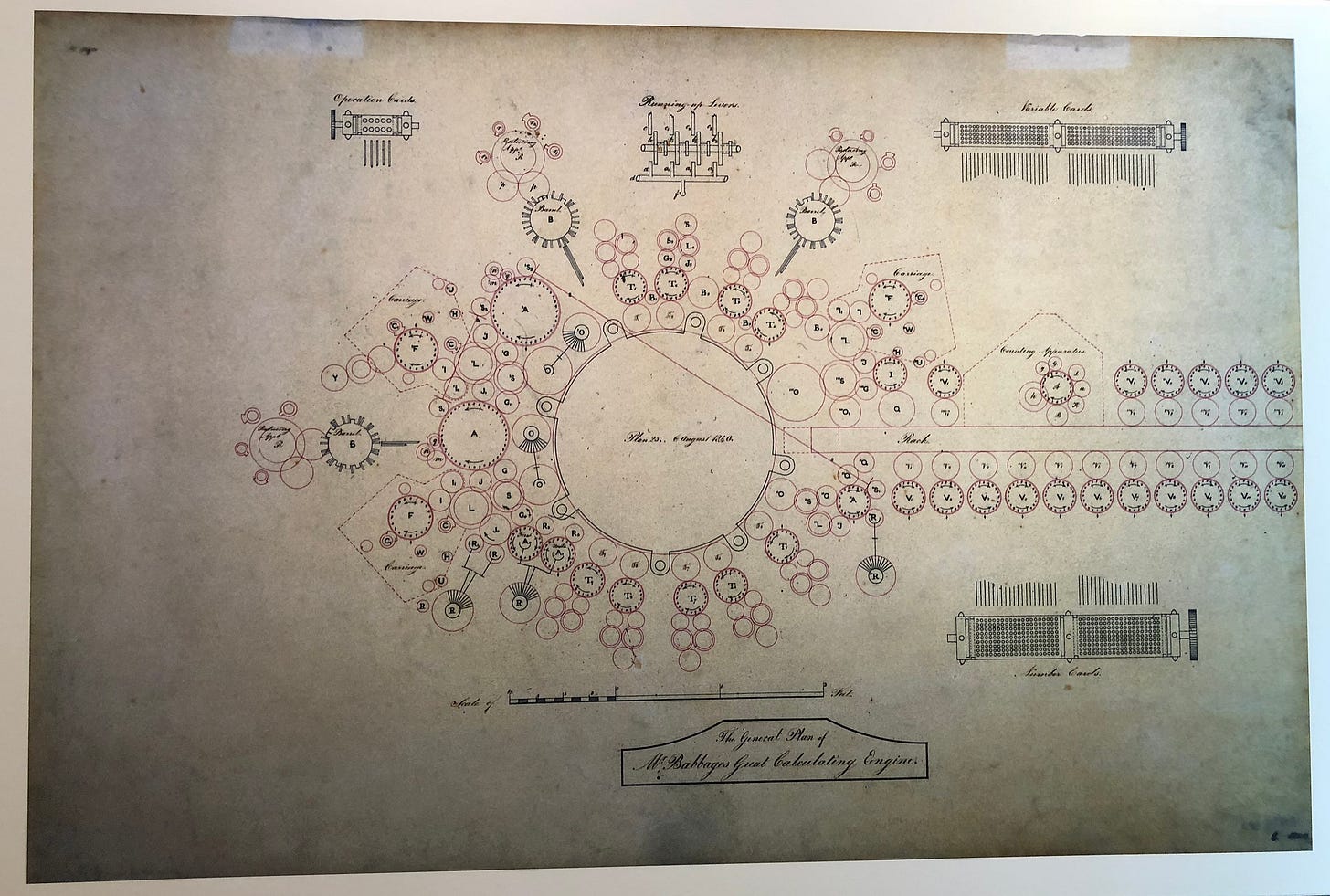

The term “computer” originally referred to individuals who performed manual calculations, a role deeply rooted in history. This changed with the emergence of groundbreaking mechanical innovations. A pivotal figure in this shift was Charles Babbage, who in the 1830s conceived the idea of the Analytical Engine.

Babbage’s concept envisioned a general-purpose calculating machine.

Despite dedicating more than three decades to its development, Babbage never saw the Analytical Engine completed in his lifetime. Some components of the machine were eventually constructed after his death, including by his son.

In this context, Ada Lovelace’s contributions are indispensable. Collaborating closely with Babbage, Lovelace translated an article about the Analytical Engine and supplemented it with her own extensive notes, which were roughly three times the length of the original article. Most notably, she is credited with creating what many consider the first computer program: an algorithm for the Analytical Engine to calculate Bernoulli numbers.

Lovelace’s vision extended beyond number crunching; she foresaw the machine’s potential to manipulate any symbolically representable content, including music and art. She envisioned a general-purpose machine! Her work led to the first algorithm intended for such a machine, earning her recognition as one of the earliest computer programmers and a visionary in the field of computing.

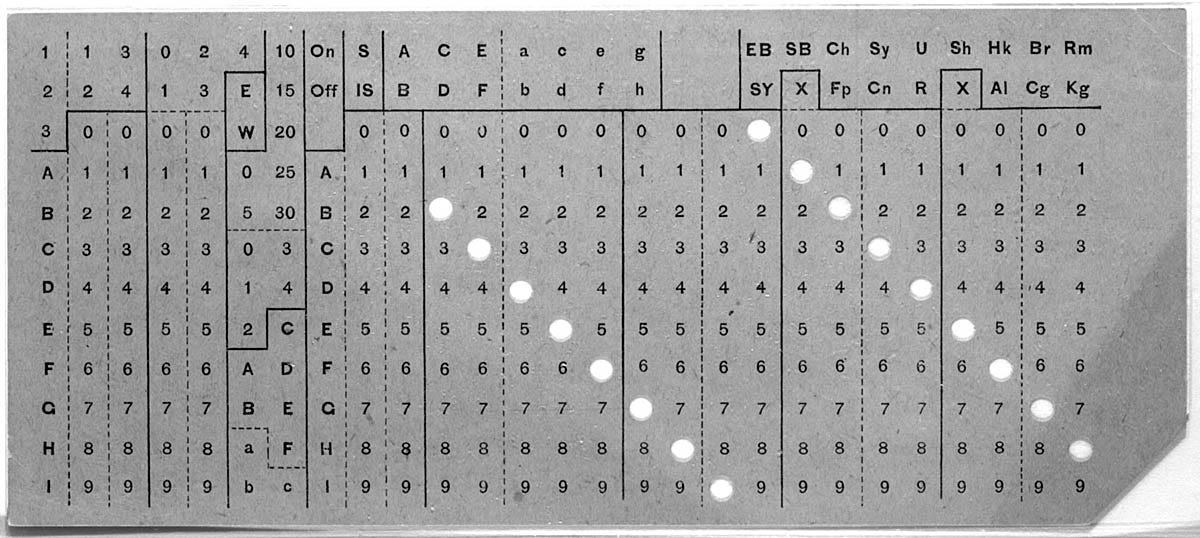

In the 1890s, Herman Hollerith's tabulating machine marked another significant step. Hollerith adapted punch cards, originally used in Jacquard looms, for computation. These cards, made of stiff paper with holes representing data (e.g., age, location), served as a form of input, analogous to how we use a mouse and keyboard today.

In 1936, Alan Turing's groundbreaking work laid the theoretical foundation for modern computing and the development of general-purpose computers. His introduction of the "Turing machine"—a theoretical model of computation involving a simple, abstract machine manipulating symbols on a tape according to a set of rules—provided a precise definition of computation. Furthermore, his concept of the "Universal Turing machine" demonstrated that a single machine could perform any computation, given the appropriate program, a principle crucial to the design of programmable computers.
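To make the idea of "a simple, abstract machine manipulating symbols on a tape according to a set of rules" a little more concrete, here is a minimal sketch in Python. It is only an illustration, not Turing's original formulation: the function name `run_turing_machine`, the rule format, and the binary-increment rule table are all assumptions made for this example.

```python
from collections import defaultdict

BLANK = "_"  # symbol used for empty tape cells (an assumption of this sketch)


def run_turing_machine(rules, tape, state, head, halt_state="halt", max_steps=1000):
    """Run a one-tape machine and return the non-blank part of the final tape.

    `rules` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" (left), "R" (right), or "N" (stay put).
    """
    # The tape is unbounded in both directions; unseen cells read as BLANK.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += {"L": -1, "R": 1, "N": 0}[move]
        steps += 1

    used = [i for i, symbol in cells.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical rule table: add 1 to a binary number.
# The head starts on the rightmost digit in the "carry" state.
INCREMENT_RULES = {
    ("carry", "1"): ("0", "L", "carry"),   # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "N", "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", "N", "halt"),  # ran off the left edge: new digit
}

result = run_turing_machine(INCREMENT_RULES, "1011", state="carry", head=3)
print(result)
```

The simulator itself never changes; only the rule table does. Swapping in a different table gives a different "machine", which is a small-scale hint at the universal machine idea: one general mechanism, many programs.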

Then came WWII

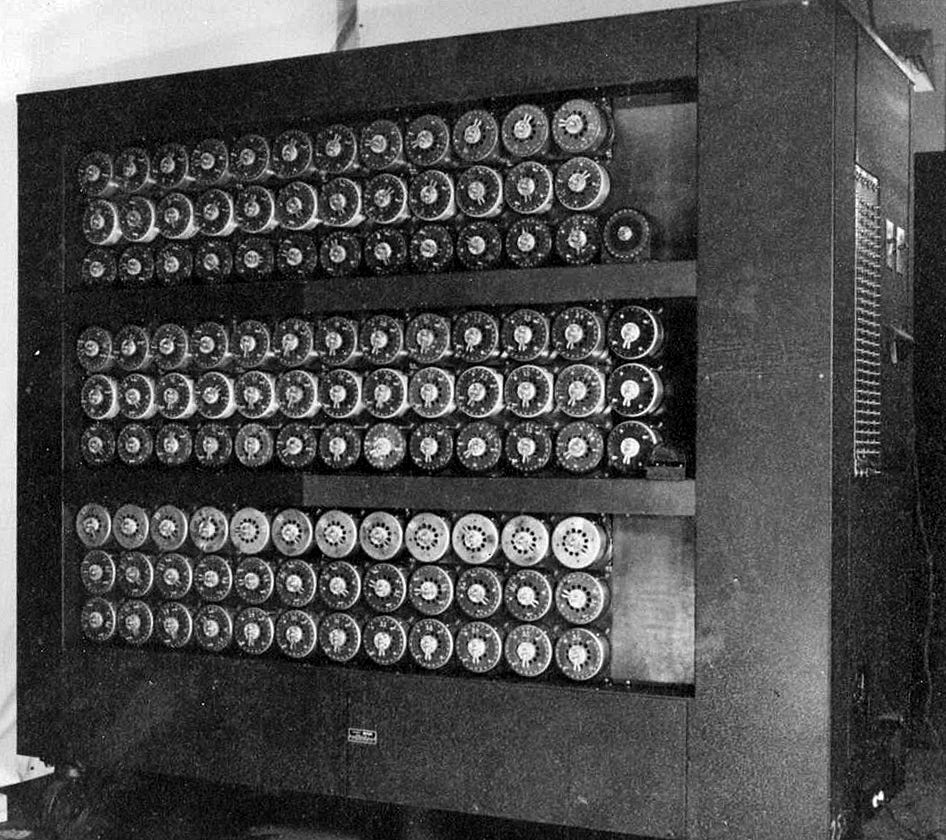

During World War II, Turing's theories were put to a practical test with the Bombe machine, a device that was pivotal in cryptography and helped validate his earlier work.

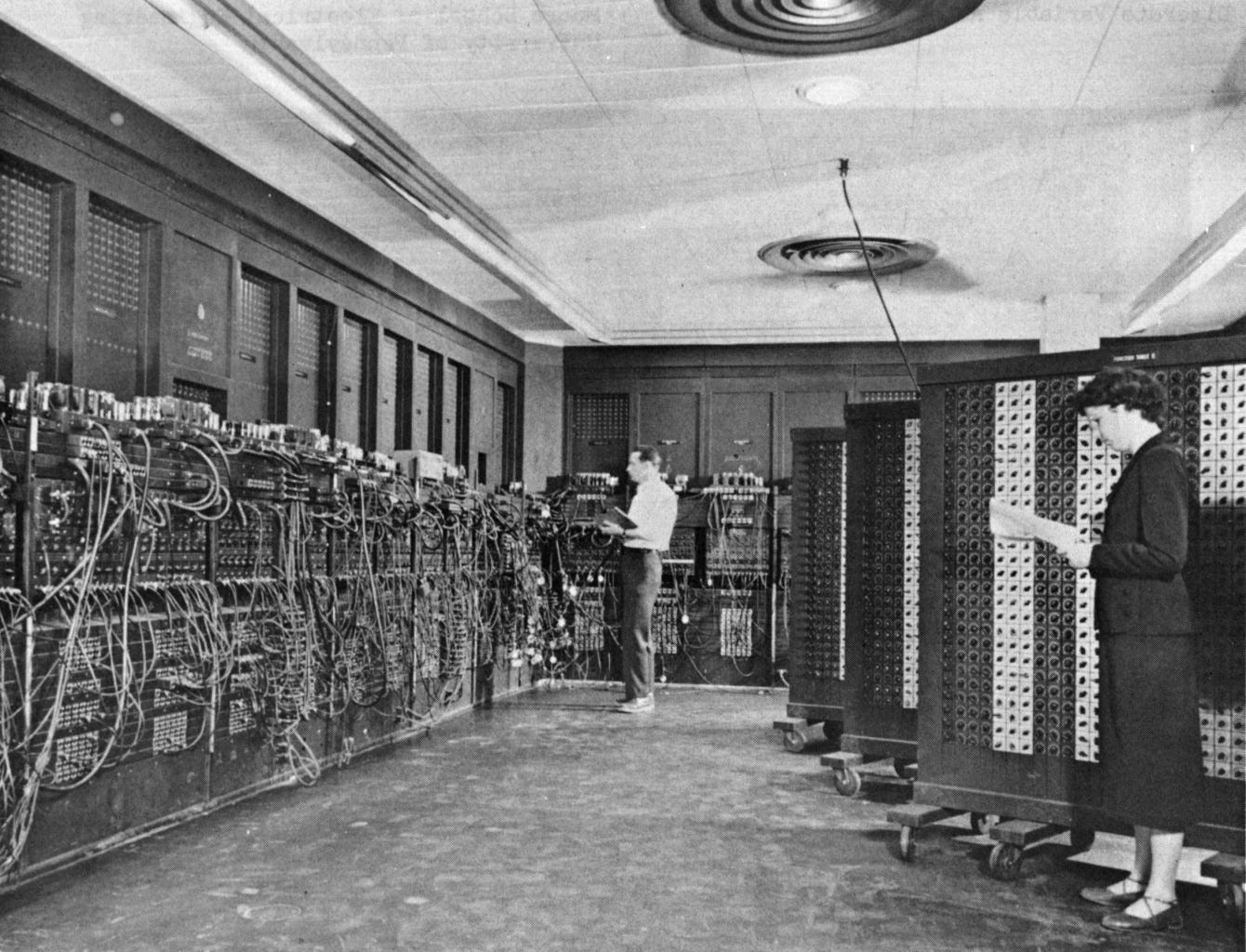

Early mechanical and electronic computers, like the British Bombe and later Colossus, were typically specialised for tasks like code-breaking. The American ENIAC, begun in 1943 and operational shortly after the war ended, marked a shift toward general-purpose computing. Machines like ENIAC represented a departure from single-purpose devices, ushering in a new era.

The war left two important technological legacies: a newfound appreciation for computing's potential (demonstrated by its role in code-breaking) and a sustained interest in the behavioral aspects of design, notably engineering psychology. This discipline emerged to address design flaws in military equipment that led to significant losses. Engineering psychology focused on matching people to jobs, training them effectively, and designing more intuitive and user-friendly equipment (a precursor to User Experience Design). This was evident in efforts to improve the B-17 Flying Fortress.

Why does this matter?

Early visions of computing, as symbolic and creative rather than purely numeric, were the philosophical basis for treating the computer as a medium, not just a tool. This idea will keep being explored. The shift to general-purpose computing demanded new ways for users to tell machines what to do, planting the seed for modern interfaces.

Then comes the screen…

Concurrent with other technological advancements, cathode-ray tube (CRT) technology matured significantly during World War II. Originating with Karl Ferdinand Braun in 1897, CRTs became essential to radar systems by the 1930s and 40s. They provided, for the first time, a real-time electronic display of real-world data, setting the stage for screen-based computing in the 1950s.

The Williams tube, an early form of electronic data storage built on a CRT, was pivotal. It was a key component of early computers like the Manchester Baby (operational in 1948).

After the War: Vannevar Bush and the Memex Machine

After World War II, engineers and scientists shifted focus to civilian and technological challenges, particularly the growing "information explosion."

Vannevar Bush, with his influential 1945 essay "As We May Think," was central to this shift.

Bush conceptualized the Memex, a theoretical device to enhance memory and information processing. Often compared to a primitive search engine, the Memex was designed to use microfilm, a leading storage medium at the time. It was a visionary step toward automated information retrieval.

Bush presciently described the potential impact of such machines, suggesting they would significantly augment human thinking and understanding. He envisioned them as transformative, much like mechanical power had previously relieved humans of physical labor.

> Consider a future device for individual use, which is a sort of mechanized private file and library. It needs a name, and, to coin one at random, “memex” will do. A memex is a device in which an individual stores all his books, records, and communications, and which is mechanized so that it may be consulted with exceeding speed and flexibility. It is an enlarged intimate supplement to his memory... Wholly new forms of encyclopedias will appear, ready made with a mesh of associative trails running through them, ready to be dropped into the memex and there amplified.

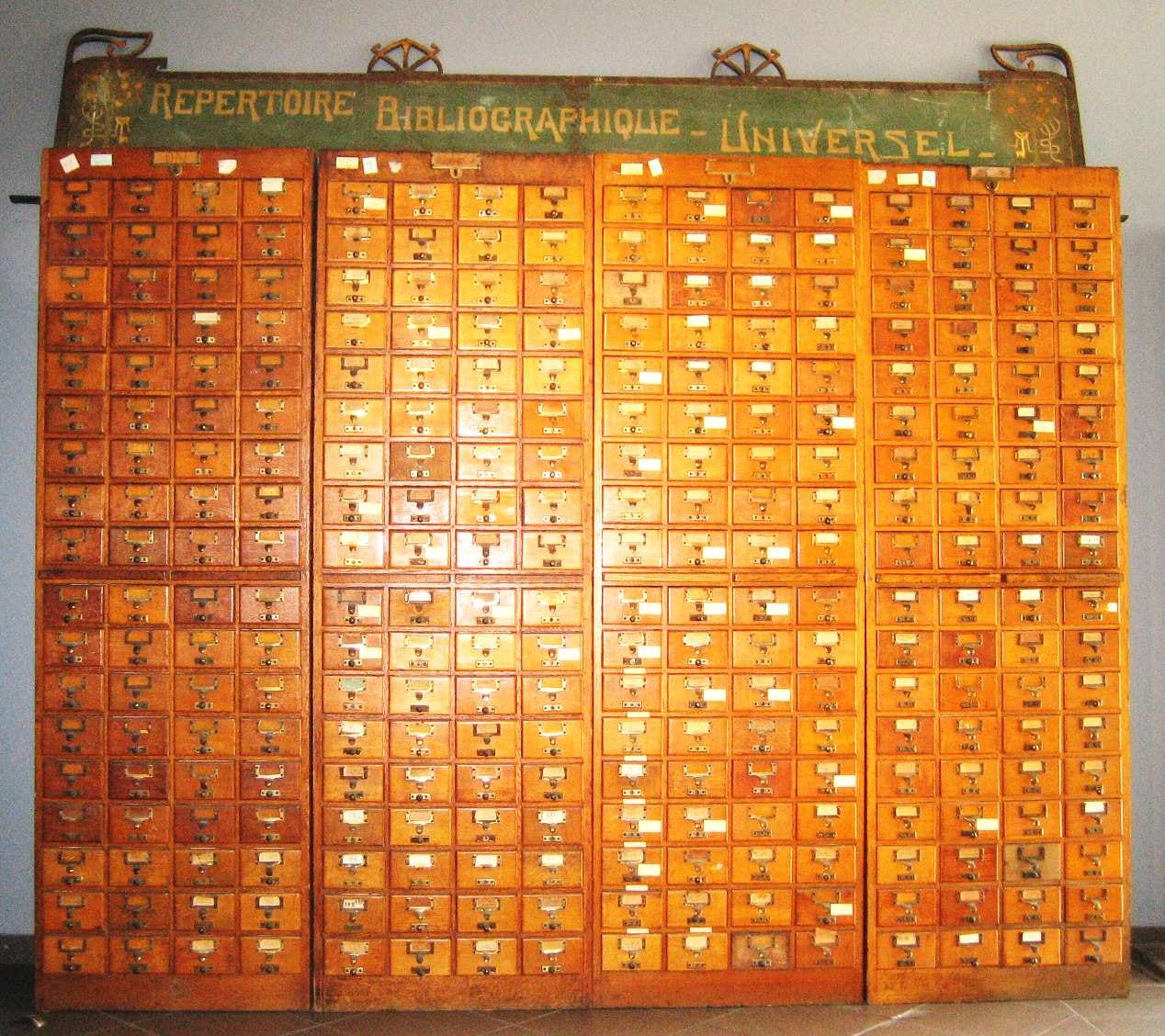

While the Memex is often seen as an early prototype of a global information network, it wasn't the first attempt at large-scale information organisation. Paul Otlet's "Mundaneum," which predated the Memex, used index cards in an ambitious effort to create the first global information network. Otlet's work, though less known, was pioneering in information management and retrieval.

Bush's ideas and the Memex concept spurred numerous revolutionary projects in computing and information technology. These were early visions for the interconnected, information-rich world we live in today.

Cold War and SAGE Computer

The Cold War pushed the boundaries of real-time data processing and interactive computing. The Semi-Automatic Ground Environment (SAGE) air-defense system, whose computers were built by IBM, was a major contribution.

In IBM’s 1956 promotional film “On Guard,” the groundbreaking nature of SAGE’s display scope was highlighted. The film described it as “a stationary advance in data processing” and “a computer-generated visual display, on call as needed.”

SAGE offered real-time interactive graphical data representation, a significant leap forward for its time.

The film vividly demonstrated how radar data was displayed on a CRT screen and how operators could interact with this data using a light pen, an early precursor to the modern computer mouse. The light pen’s earliest use dates back to between 1951 and 1955 on the Whirlwind computer, an MIT project and a forerunner of SAGE, where it was used for simple selection tasks.

This approach to the user interface facilitated a new level of interaction between humans and machines. It blended automated data collection with human intuition, transforming users from passive observers to active participants in the computing process. CRT technology became a foundational element in this evolution, featuring in notable machines like the PDP-1, which hosted one of the first computer games, “Spacewar!”, and the PLATO computer system developed at the University of Illinois.

These milestones in human-computer interaction were the groundwork for future advancements, sparking ideas about the very nature of computers, their intended users, and specialised interaction methods. Throughout all of this, the “information explosion” and computers became inseparable concepts.

Why does this matter?

The ability to “see” the machine’s output visually introduced the core GUI principle: visual feedback. Light pens and real-time data marked the beginning of interactive computing—where users and machines exist in a shared visual and temporal space. Bush and Otlet’s visions continued to explore computers as cognitive partners, not just calculators—placing UI at the heart of sense-making.

What else can a computer do, who is it for, and why?

We’ll be exploring that in the next issue.
